The user interface of the future

Artificial intelligence reacts to emotions

Devices and software are using artificial intelligence to learn to react to user emotions. This will dramatically change the user interface of the future.

Text: Hansjörg Honegger

E-readers are really practical: Lightweight, a memory full of books, and some are even constantly online. All you have to do for yourself is read and understand the texts – at least, until now. By utilising artificial intelligence and methods to analyse human emotions, e-readers will be able to do a lot more in future:

- The letters automatically become a little larger when signs of fatigue become apparent.

- A quizzical way of looking at a word makes a definition – or translation – appear.

- If the reader’s concentration is starting to wane, then the entire text that follows is formulated in a more easily comprehensible way. All this is done to ensure the reader keeps reading for as long as possible.

This is – in part – still a long way in the future. However, specialists in UX (“user experience”) have realised that simply presenting users with an intuitive user interface based on familiar standards is no longer enough. Anyone who uses a device today has emotional expectations that keep changing over time. In future, the user interface will need to be able to keep up with these expectations. Quite apart from these expectations, wearables, for example, will require a completely new type of user interface. And voice control is nowhere near the last word on the subject.

Avoiding disappointments

In its study, “The Experience Revolution: Digital Disappointment – Why Some Consumers Aren’t Fans”, IBM established that 70 percent of customers are not satisfied with digital products. Their disappointment in virtual reality or in automatic voice control is stopping them from wanting to enter into a relationship with the brand a second time. Convenience, fast results and straightforward processes are decisive for turning a user into a fan of the brand. As well as an attractive price, of course.

Disappointment means that expectations were not met. But how is a device supposed to recognise expectations that ultimately depend on the emotional sensitivities of the customer? Professor Per Bergamin, Head of the Institute for Correspondence Courses and e-Learning Research (IFeL) in Brig, has spent years working on this question. His research unit, “Personalised and Adaptive Learning”, aims to make it possible for students to benefit from individually adapted, screen-based learning experiences. “Our solution allows the level of difficulty of the study material to be adapted automatically to the progress that the student is making”, Bergamin explains. The system is constantly adding to its knowledge base in the background and is capable of interpreting the level of a student’s knowledge by means of their answers. This is used as a basis for presenting the next round of material.
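To make the idea of answer-driven adaptation concrete, here is a minimal sketch in Python. It is not the IFeL software: the `LearnerModel` class, the moving-average update, the `rate` value and the five difficulty levels are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class LearnerModel:
    """Hypothetical running estimate of a student's mastery, between 0 and 1."""
    mastery: float = 0.5
    rate: float = 0.3  # assumed weight of a single answer

    def update(self, correct: bool) -> None:
        # Exponential moving average: a correct answer pulls the estimate
        # towards 1.0, a wrong answer pulls it towards 0.0.
        target = 1.0 if correct else 0.0
        self.mastery += self.rate * (target - self.mastery)

    def next_level(self, levels: int = 5) -> int:
        # Map the mastery estimate onto difficulty levels 1..levels.
        return min(levels, int(self.mastery * levels) + 1)


def run_session(answers):
    """Return the difficulty level chosen after each answer."""
    model = LearnerModel()
    chosen = []
    for correct in answers:
        model.update(correct)
        chosen.append(model.next_level())
    return chosen
```

Under these assumptions, a run of correct answers gradually raises the level of the next round of material, while wrong answers lower it. A real system would draw on a far richer knowledge base than a single number.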

Bergamin provides a simple example: “The software can ask how the evaporation of ice functions. Or it can split this question up into ten individual questions which steadily guide the student towards the right solution.” In the past, Bergamin says, learning progress was determined using corresponding questions, a painstaking business which demanded a lot of manual work from the developers. Now, in phase 2, the research team in Brig is working on an approach that also factors human emotions into the equation. “There are people who don't like answering questions, and these students should not be disadvantaged”, Bergamin emphasises. Facial expressions are a potential way to obtain information about the level of the students’ knowledge. Is the student looking confused, quizzical or particularly keen? The software interprets these feelings and then presents the corresponding material. Of course, this does require the student to have the corresponding hardware at their disposal.

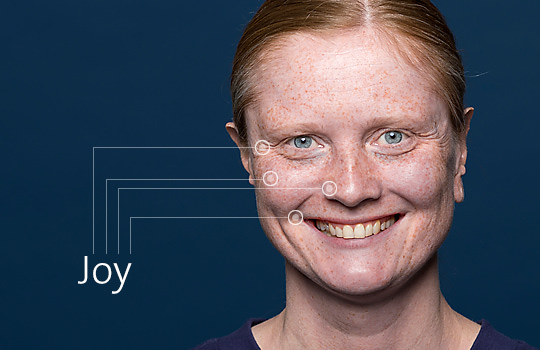

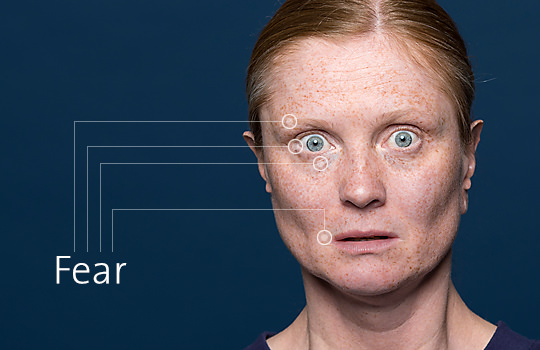

Sonar: More efficient than classic reports

But do people from different cultures actually express their feelings in a way that allows a machine to recognise them? When, for example, facial expressions are limited to the seven basic emotions described by Paul Ekman, then this shouldn't be a problem. The expressions corresponding to joy, anger, fear, disgust, sadness, surprise and contempt are innate to every person, and can be identified worldwide. Whether the machine interprets the expression correctly in the corresponding context is another matter altogether. Is the student despairing because of having to answer the same stupid question for the third time running, or is it because he has not understood the question for the third time in a row?

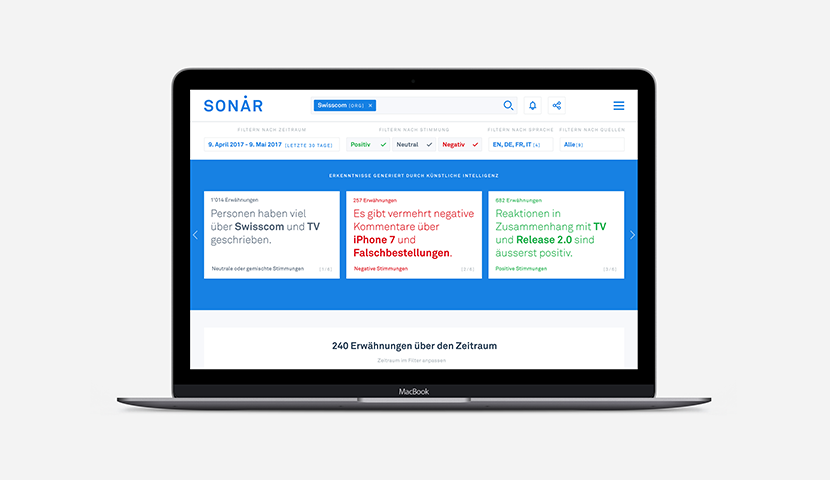

Recognising human feelings not only has increasing importance for user interfaces – its significance is also increasing for product development and marketing. “Sonar”, a solution developed by Swisscom, can recognise which feelings the customer is harbouring towards the company, or its products and services, in real time – and whether there are any storms gathering somewhere in Switzerland. “In a pilot trial, we are analysing different public Internet channels”, Marc Steffen, Head of Product Design, Artificial Intelligence & Machine Learning Group at Swisscom, explains. In itself, this is nothing new. Previously, however, the insights gained would have been combined, sometimes manually, into lengthy reports and sent on to a number of interested parties – in the best case, as a dashboard; in the worst case, as a text document.

Today, Sonar analyses the reactions of customers in real time, shows what is being written and, at the same time, evaluates whether the trends are positive, negative or neutral. “A product manager can now search for his product in Sonar and only needs to glance at it quickly to determine whether the corresponding customer mood in social media and comments columns tends to be positive or negative, and why”, Marc Steffen says. However, before the function had progressed this far, around 10,000 statements first had to be analysed for the system and their formulations allocated to a state of mind. “This allows Sonar to learn what positive formulations are in different contexts, and what negative ones are.”
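How a system can learn from statements that have been allocated to a state of mind is sketched below in Python. The article does not say which method Sonar uses; this sketch assumes a simple word-count classifier (naive Bayes with add-one smoothing), and the names `train`, `classify` and `EXAMPLES`, along with the five sample statements, are invented for illustration.

```python
import math
from collections import Counter, defaultdict

# Invented sample statements; a real system would need thousands.
EXAMPLES = [
    ("great service and fast network", "positive"),
    ("love the new app", "positive"),
    ("terrible connection again", "negative"),
    ("slow support and bad network", "negative"),
    ("the shop opens at nine", "neutral"),
]


def train(labelled):
    """Count how often each word occurs under each label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in labelled:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the highest smoothed log-probability."""
    vocab = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in sorted(label_counts):
        counts = word_counts[label]
        # Add-one smoothing so unseen words do not zero out a label.
        denom = sum(counts.values()) + len(vocab)
        score = math.log(label_counts[label] / total)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


word_counts, label_counts = train(EXAMPLES)
mood = classify("bad connection", word_counts, label_counts)
```

With only five training statements the result is a toy, which hints at why an order of magnitude such as 10,000 allocated statements is needed before the evaluations become dependable across different contexts.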

The emotion determines the content

The user interface of tomorrow won't have much in common with the way we use and manage programs and information today. The expectations of the user will increasingly determine what he sees and is presented with. Artificial intelligence will make this possible, as it learns to interpret the emotions experienced by the user – or the customer – from their tone of voice, facial expression and the written word. This will result in some debate: Will the user even want to allow the service provider to see inside his mind, so to speak?

Per Bergamin points out that there are already devices available on the market for relatively little money which can, among other things, interpret brain activity. However, leaving others the freedom to decide this for themselves is a principle he adheres to: “Even today, the influence of our learning software automatically decreases as the learner’s level of knowledge increases, and instead of being instructional, tends to lend support, for example by providing additional information that can be used when needed.” Exactly where the line needs to be drawn still remains to be seen. However, Marc Steffen from Swisscom considers this to be clear-cut: “This area needs absolute transparency, and ultimately the user’s free choice as well. The user must be kept informed about what the service provider is doing, and must be able to decide against it at any time.”

Additional Information

Beware emotional robots: Giving feelings to artificial beings could backfire, study suggests: With chatbots in particular, the type of conversation is decisive. Strong emotions on the part of the machine have an unsettling effect.

Amazon Working on Making Alexa Recognize Your Emotions: Alexa aims to know when the user is irritated, and to apologise accordingly. This would be highly innovative for a computer.

Sonar recognises customer moods

Sonar, a system developed by Swisscom, gathers customers' opinions via different channels, for example Twitter, the comments on newspaper websites, RSS feeds or the service desk. Positive, negative or neutral content – Sonar analyses the mood automatically. In addition, the system recognises trends or themes or summarises customer feedback into a few sentences. Sonar makes it possible to recognise how a brand is perceived by customers. And as an easy-to-use web application it helps employees to understand their customers better and make informed decisions based on data.